3.6 Cilium Service Mesh

State/Version: 20250706


In a Kubernetes environment, Cilium acts as a networking plugin that provides connectivity between pods. It provides security through network policy enforcement and transparent encryption, and its Hubble component provides deep visibility into network traffic flows.

Thanks to eBPF, Cilium’s networking, security, and observability logic can be programmed directly into the kernel, making Cilium and Hubble’s capabilities entirely transparent to application workloads. These are typically containerized workloads in a Kubernetes cluster, though Cilium can also connect traditional workloads such as virtual machines and standard Linux processes.

3.6.1 Capabilities

Networking 🌐

Cilium provides network connectivity, allowing communication between pods and other components (within or outside a Kubernetes cluster). It implements a simple flat Layer 3 network that can span multiple clusters and connect all application containers.

By default, Cilium uses an overlay networking model, in which a virtual network spans all hosts. Traffic in an overlay network is encapsulated for transport between hosts. This mode is the default because it places the fewest demands on the infrastructure: the only requirement is IP connectivity between hosts.
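To make “encapsulated” concrete, here is a minimal Python sketch of wrapping a pod’s Ethernet frame in a VXLAN header (RFC 7348), assuming VXLAN as the tunnel format (Cilium also supports Geneve). The function names are illustrative only, and the outer IP/UDP headers that the host adds to carry the packet between nodes are left out.

```python
import struct

# VXLAN "I" flag (RFC 7348): set when the VNI field carries a valid value.
VXLAN_FLAG_VNI_VALID = 0x08


def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend an 8-byte VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Header layout: flags (1 byte) | reserved (3 bytes) | VNI (3 bytes) | reserved (1 byte)
    header = struct.pack("!B3x", VXLAN_FLAG_VNI_VALID) + struct.pack("!I", vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(packet) < 8 or not packet[0] & VXLAN_FLAG_VNI_VALID:
        raise ValueError("not a valid VXLAN packet")
    (word,) = struct.unpack("!I", packet[4:8])
    return word >> 8, packet[8:]  # the top 24 bits of this word hold the VNI
```

Because the receiving host only has to strip these headers to recover the original frame, the network between hosts never needs to know about pod addresses. That is why overlay mode asks so little of the infrastructure.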

Cilium also offers the option of a native routing networking model, using the regular routing table on each host to route traffic to pod (or external) IP addresses. This mode is for advanced users and requires awareness of the underlying networking infrastructure. It works well with native IPv6 networks, cloud network routers, or pre-existing routing daemons.
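In native routing mode, delivering a packet comes down to an ordinary routing-table lookup. The sketch below uses a hypothetical table in which each node owns one /24 pod CIDR and shows the longest-prefix match that picks the next hop; the addresses and the one-CIDR-per-node layout are assumptions for illustration.

```python
import ipaddress

# Hypothetical host routing table: pod CIDRs point at the node that owns them.
ROUTES = {
    ipaddress.ip_network("10.0.0.0/24"): "192.168.1.10",  # pods on node-1
    ipaddress.ip_network("10.0.1.0/24"): "192.168.1.11",  # pods on node-2
    ipaddress.ip_network("0.0.0.0/0"): "192.168.1.1",     # default gateway
}


def next_hop(dst: str) -> str:
    """Return the next hop for dst using longest-prefix match."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)  # most specific route wins
    return ROUTES[best]
```

Something (Cilium, a cloud router, or an existing routing daemon) has to keep these per-node routes accurate as nodes join and leave, which is the infrastructure awareness this mode demands.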

Identity-aware Network Policy Enforcement 🚚

Network policies define which workloads are permitted to communicate with one another, securing your deployment by preventing unexpected traffic. Cilium can enforce both native Kubernetes NetworkPolicies and an enhanced CiliumNetworkPolicy resource type.

Traditional firewalls secure workloads by filtering on IP addresses and destination ports. In a Kubernetes environment, pods come and go constantly and receive new IP addresses, so whenever a pod starts anywhere in the cluster, the firewalls (or iptables rules) on every node must be rebuilt to keep enforcing the desired network policy. This doesn’t scale well.

To avoid this, Cilium assigns an identity to groups of application containers based on relevant metadata, such as Kubernetes labels. The identity is then associated with all network packets the containers emit, allowing eBPF programs on the receiving node to validate it efficiently, without any Linux firewall rules. For example, when a deployment is scaled up and a new pod is created somewhere in the cluster, the new pod shares the same identity as the existing pods. The eBPF rules that enforce network policy do not need to be updated, because they already know the pod’s identity!
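The following Python sketch illustrates why identity-based enforcement survives a scale-up. The `IdentityAllocator` class, the starting identity 1000, and the set-of-pairs policy are hypothetical simplifications, not Cilium’s actual data structures, which live in eBPF maps in the kernel.

```python
from itertools import count


class IdentityAllocator:
    """Hypothetical allocator: one numeric identity per distinct label set."""

    def __init__(self, first_id: int = 1000):
        self._ids: dict[frozenset, int] = {}
        self._next = count(first_id)

    def identity_for(self, labels: dict[str, str]) -> int:
        key = frozenset(labels.items())  # label order does not matter
        if key not in self._ids:
            self._ids[key] = next(self._next)
        return self._ids[key]


def allowed(policy: set[tuple[int, int]], src_id: int, dst_id: int) -> bool:
    """Policy is a set of (source identity, destination identity) pairs."""
    return (src_id, dst_id) in policy


alloc = IdentityAllocator()
frontend_pod_1 = alloc.identity_for({"app": "frontend"})
backend = alloc.identity_for({"app": "backend"})
policy = {(frontend_pod_1, backend)}  # "frontend may talk to backend"

# Scale-up: a new frontend pod, anywhere in the cluster, gets the same identity,
# so the existing policy entry already covers it without any rule changes.
frontend_pod_2 = alloc.identity_for({"app": "frontend"})
```

Note that no IP address appears anywhere in the policy: a pod’s address can change freely without touching the rules.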

Traditional firewalls operate at Layers 3 and 4. In addition to enforcing policy at those layers, Cilium can secure modern Layer 7 application protocols such as REST/HTTP, gRPC, and Kafka. It can enforce network policy based on application-protocol request conditions such as:

- Allow all HTTP requests with method GET and path /public/.*. Deny all other requests.

- Require the HTTP header X-Token: [0-9]+ to be present in all REST calls.
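The two example rules above can be modeled with a small, hypothetical evaluator. It assumes default-deny semantics (a request passes only if some rule matches) and that each regex must match the entire method, path, or header value; `HttpRule` and `is_allowed` are illustrative names, not Cilium’s API. In Cilium itself, Layer 7 HTTP rules are enforced by an Envoy-based proxy.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HttpRule:
    """Hypothetical L7 rule: every regex must match the whole field."""
    method: str = ".*"
    path: str = ".*"
    headers: dict[str, str] = field(default_factory=dict)  # header name -> value regex

    def matches(self, method: str, path: str, headers: dict[str, str]) -> bool:
        if not re.fullmatch(self.method, method) or not re.fullmatch(self.path, path):
            return False
        # HTTP header names are case-insensitive, so compare them lower-cased.
        lowered = {name.lower(): value for name, value in headers.items()}
        for name, pattern in self.headers.items():
            value = lowered.get(name.lower())
            if value is None or not re.fullmatch(pattern, value):
                return False
        return True


def is_allowed(rules: list[HttpRule], method: str, path: str,
               headers: Optional[dict[str, str]] = None) -> bool:
    """Default deny: a request passes only if at least one rule matches it."""
    return any(rule.matches(method, path, headers or {}) for rule in rules)


# The two example policies from the text.
public_get_only = [HttpRule(method="GET", path="/public/.*")]
require_token = [HttpRule(headers={"X-Token": "[0-9]+"})]
```

With `public_get_only`, a `GET /public/index.html` passes while a `POST` to the same path or a `GET /private/data` is denied; with `require_token`, any request lacking a numeric `X-Token` header is denied.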

Transparent Encryption 🛂

Encryption of data in transit between services is now a requirement in many regulatory frameworks, such as PCI DSS and HIPAA. Cilium supports simple-to-configure transparent encryption using IPsec or WireGuard, which, once enabled, secures traffic between nodes without reconfiguring any workload.

Multi-cluster Networking 🔀

Cilium’s Cluster Mesh capabilities make it easy for workloads to communicate with services hosted in different Kubernetes clusters. You can make services highly available by running them in clusters in different regions, using Cilium Cluster Mesh to connect them.

Load Balancing 🔀

Cilium implements distributed load balancing for traffic between application containers and external services. As you’ll see later in this course, Cilium can fully replace components such as kube-proxy and can be used as a standalone load balancer. Load balancing is implemented in eBPF using efficient hash tables, so lookups stay fast even with very large numbers of services and backends.
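As a rough sketch of hash-table-based service load balancing, the Python below looks up a service by virtual IP and port in a dictionary, then picks a backend by hashing the connection’s 5-tuple so that every packet of one connection lands on the same backend. The service table and addresses are hypothetical, and modulo hashing is a simplified stand-in: Cilium has its own backend-selection algorithms, including Maglev consistent hashing.

```python
import hashlib
from typing import Optional

Backend = tuple[str, int]

# Hypothetical service table: (virtual IP, port) -> list of (pod IP, port) backends.
SERVICES: dict[tuple[str, int], list[Backend]] = {
    ("10.96.0.10", 80): [("10.0.0.5", 8080), ("10.0.1.7", 8080), ("10.0.2.9", 8080)],
}


def select_backend(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
                   proto: str = "tcp") -> Optional[Backend]:
    """Pick a backend for a connection; None if dst is not a service address."""
    backends = SERVICES.get((dst_ip, dst_port))  # constant-time hash-table lookup
    if not backends:
        return None
    # Hash the 5-tuple with a stable hash (Python's built-in hash() of str is
    # randomized per process) so every packet of a connection picks the same backend.
    key = f"{proto}:{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return backends[digest % len(backends)]
```

Plain modulo hashing reshuffles most connections when a backend is added or removed; consistent-hashing schemes such as Maglev exist to limit that disruption.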

Enhanced Network Observability 🔍️

While we’ve learned to love tools like tcpdump and ping, which will always find a special place in our hearts, they are just not up to the task of troubleshooting networking issues in dynamic Kubernetes cluster environments. Cilium strives to provide observability tooling that lets you quickly identify and fix cluster networking problems.

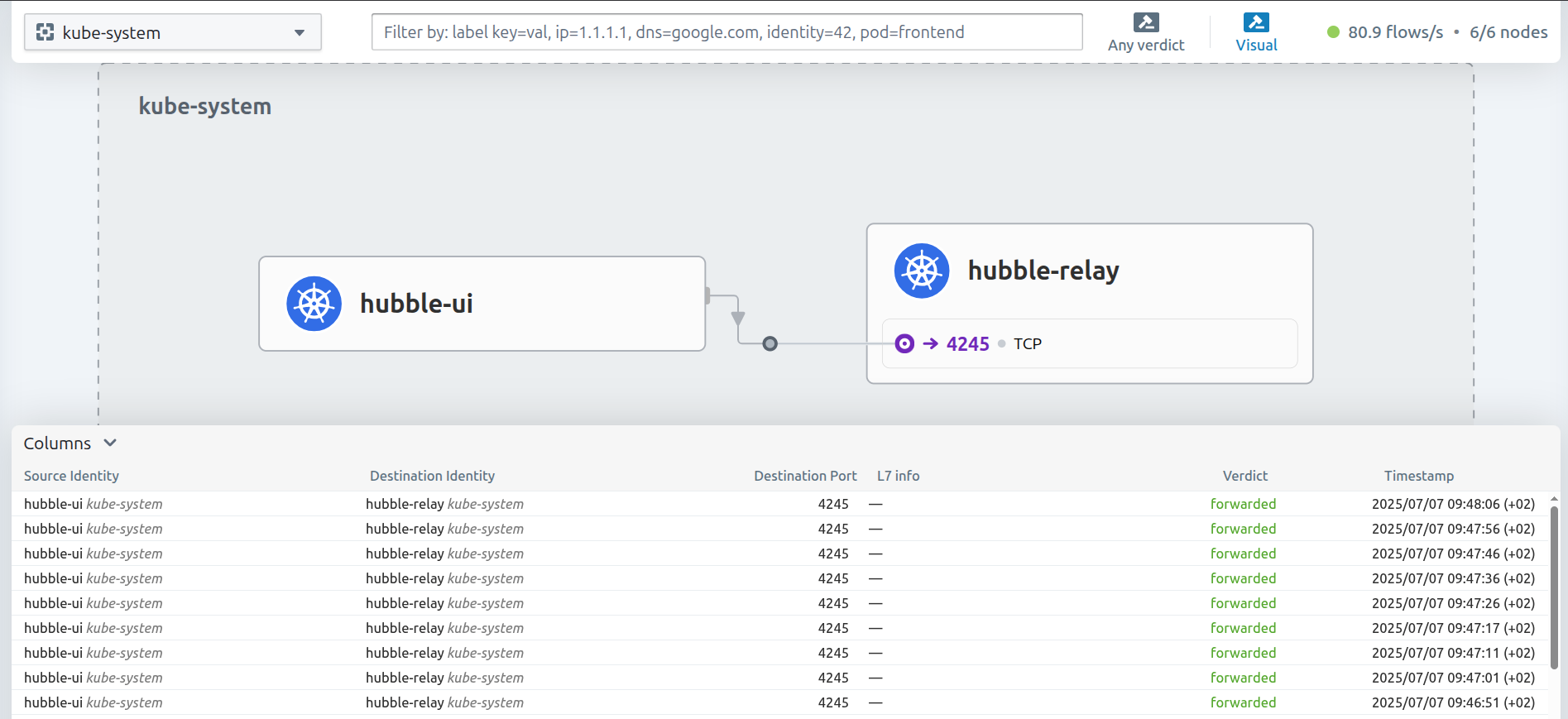

Towards that end, Cilium includes a dedicated network observability component called Hubble. Hubble makes use of Cilium’s identity concept to make it easy to filter traffic in an actionable way and provides:

- Visibility into network traffic at Layer 3/4 (IP address and port) and Layer 7 (API Protocol).

- Event monitoring with metadata: When a packet is dropped, the tool reports not only the source and destination IP but also the full label information of both the sender and receiver, among other information.

- Configurable Prometheus metrics exports.

- A graphical UI to visualize the network traffic flowing through your clusters.
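To see why label metadata makes filtering actionable, consider this simplified Python model of flow events. The `Flow` record and `filter_flows` function are hypothetical, and real Hubble flows carry many more fields. The verdict strings mirror Hubble’s FORWARDED and DROPPED verdicts, and the filters behave roughly like the `--verdict` and `--from-label` options of the `hubble observe` command.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class Flow:
    """Hypothetical, simplified flow event."""
    src_ip: str
    dst_ip: str
    dst_port: int
    src_labels: frozenset[str]  # labels as "key=value" strings
    dst_labels: frozenset[str]
    verdict: str


def filter_flows(flows: Iterable[Flow], verdict: Optional[str] = None,
                 from_label: Optional[str] = None,
                 to_label: Optional[str] = None) -> Iterator[Flow]:
    """Yield flows matching every given criterion; None means "don't filter"."""
    for flow in flows:
        if verdict is not None and flow.verdict != verdict:
            continue
        if from_label is not None and from_label not in flow.src_labels:
            continue
        if to_label is not None and to_label not in flow.dst_labels:
            continue
        yield flow


fe, be, batch = frozenset({"app=frontend"}), frozenset({"app=backend"}), frozenset({"app=batch"})
flows = [
    Flow("10.0.0.5", "10.0.1.7", 8080, fe, be, "FORWARDED"),
    Flow("10.0.0.6", "10.0.1.7", 8080, fe, be, "DROPPED"),
    Flow("10.0.2.9", "10.0.1.7", 5432, batch, be, "DROPPED"),
]
# "Which frontend traffic is being dropped?" answered by labels, not IP addresses.
drops_from_frontend = list(filter_flows(flows, verdict="DROPPED", from_label="app=frontend"))
```

A filter on `app=frontend` keeps working as pods are rescheduled and receive new IP addresses, which an IP-based tcpdump filter cannot do.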

Hubble UI

You’ll get more hands-on experience with Hubble in Chapter 5.

Prometheus Metrics

Cilium and Hubble export metrics about network performance and latency via Prometheus so that you can integrate Cilium metrics into your existing dashboards.
